Exploring the opportunities and dangers of AI

Idaho legislative workgroup meets for first time to discuss the advancing technology

BOISE — Idaho lawmakers heard a broad overview of some of the dangers and benefits of generative artificial intelligence, a rapidly advancing technology.

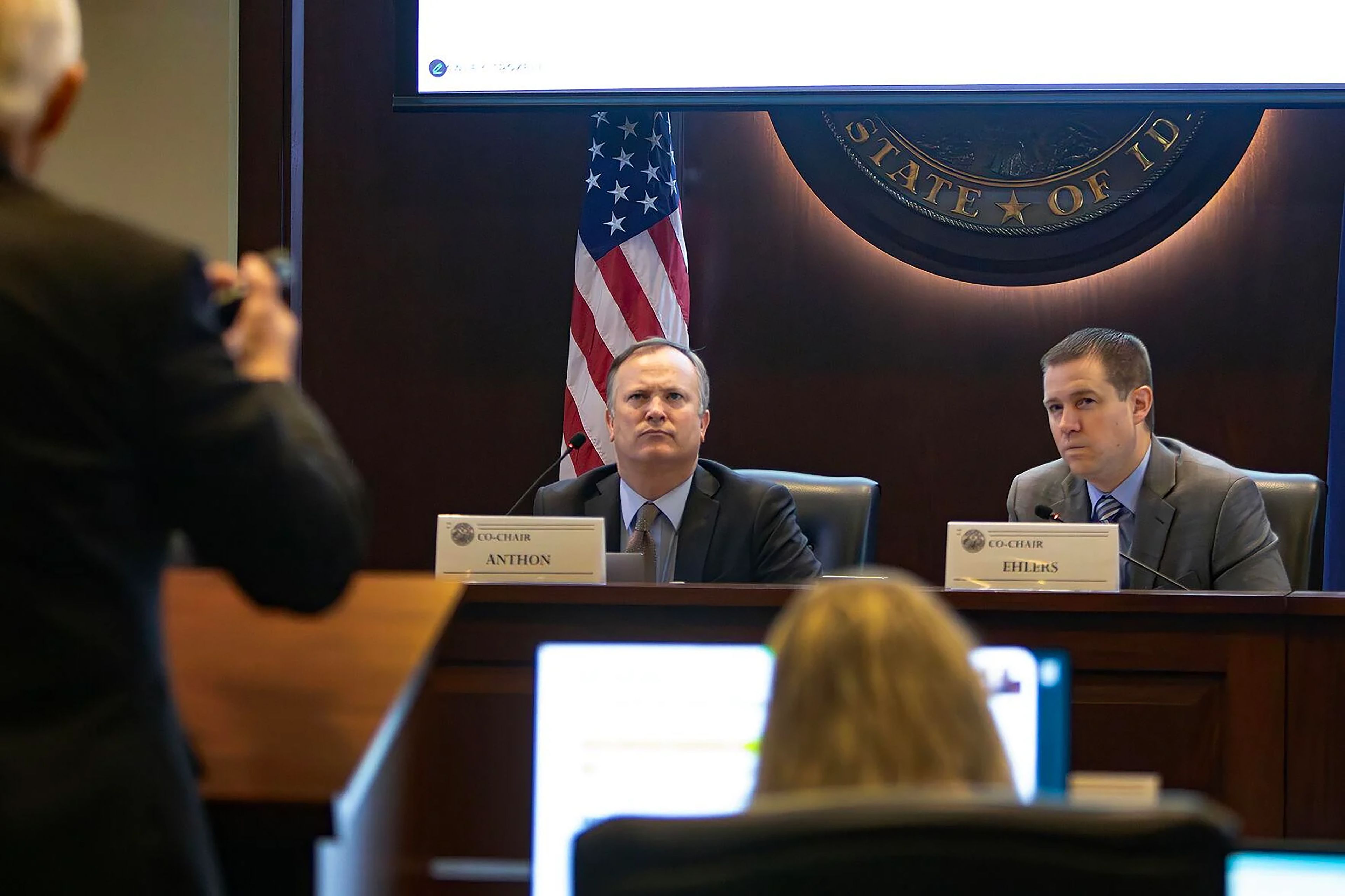

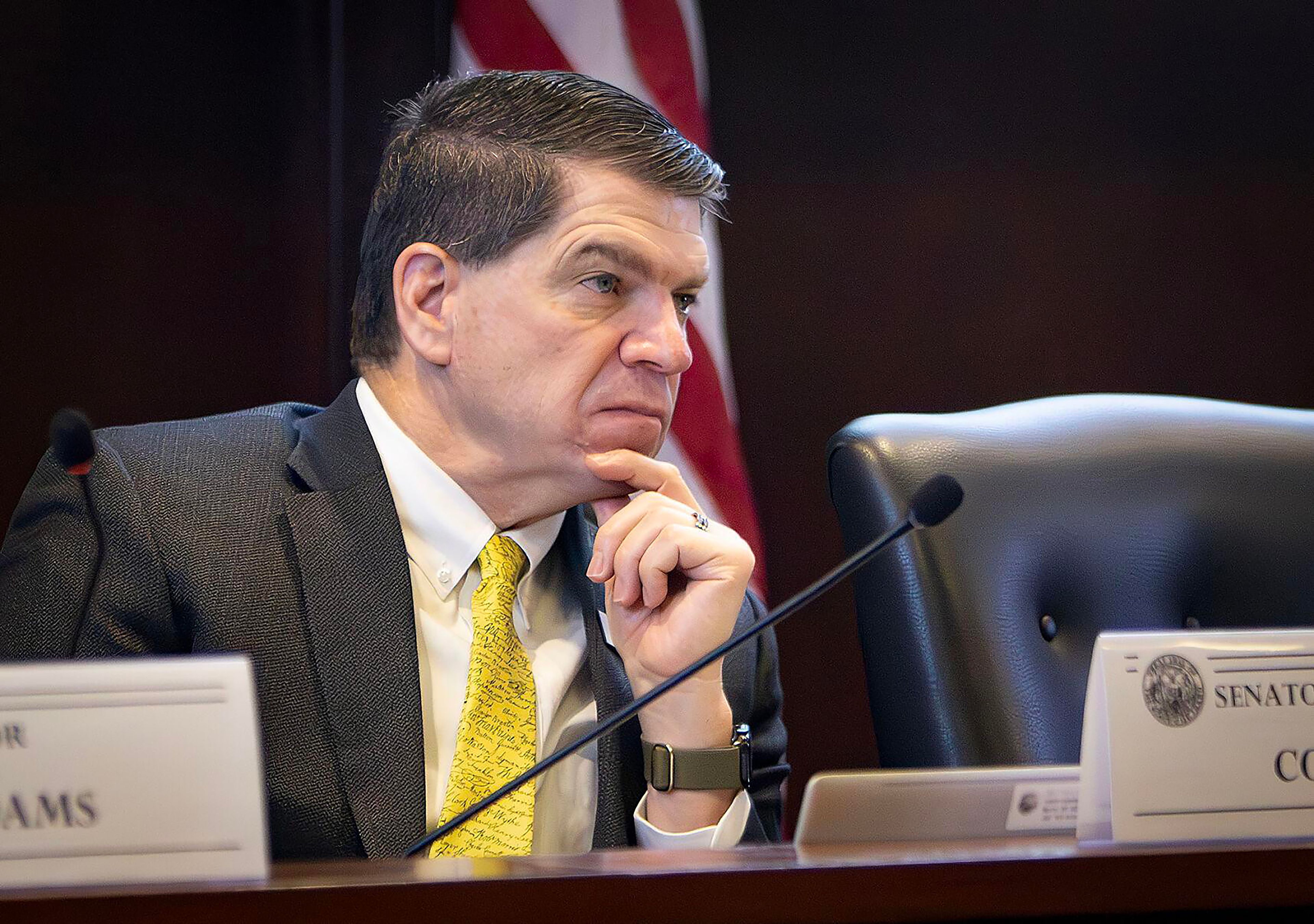

The Artificial Intelligence Working Group met Friday for the first time to begin what its co-chairperson, Sen. Kelly Anthon, R-Burley, said may be a “multiyear effort” to learn about and consider legislation regarding generative AI — a type of machine learning that can be trained to create text, images, audio, video and other content.

The group heard from Hawley Troxell partner Brad Frazer; Christopher Ritter, director of the Digital Innovation Center of Excellence and manager for digital engineering at Idaho National Laboratory; and Erick Herring, chief technology officer at VYNYL, a technology consultancy company with a presence in Idaho.

The goal of the working group is to “understand existing uses of AI within the state, and then determining what state policies or laws should be around AI,” said co-Chairperson Rep. Jeff Ehlers, R-Meridian.

Frazer is an attorney who focuses on intellectual property and information technology and is chairperson of Hawley Troxell’s Intellectual Property & Internet practice group. He gave an overview of the current legal landscape around AI, which is shifting frequently amid many ongoing court cases.

While there are benefits, Frazer said, there are a number of “pitfalls” and “perils” to using AI, including the fact that it’s difficult to legally own or sell generative AI output, to know how a model was trained and to ensure that it was not trained on legally protected data.

“If we’re going to use AI, let us be aware of that legal environment,” Frazer told lawmakers. “Let us not glibly embrace these tools, thinking that it’s a panacea because, in my opinion, from a legal perspective, there are things we have to fix or address.”

One potential pitfall is that generative AI can draw on copyright-protected data to generate images or other content that could be infringing. As an example, Frazer said he had asked an AI model to generate an image of “a scary clown living in a sewer that captures children,” and, despite no mention of the film “It,” the clown it produced looked exactly like Pennywise from the Stephen King adaptation.

“If you were to innocently promulgate and use the image on the left,” Frazer said, noting his slide with the clown picture, “because you had AI spin it up, and you disseminate that image, you’re going to very likely get sued for copyright infringement.”

His advice for the state included ensuring that the data used to train an AI model is properly licensed and accurate, creating and reviewing AI usage policies for employees and contractors, and reviewing insurance policies.

Ritter discussed how INL is using the technology in current and in-progress projects, as well as how the technology has advanced over the last few years.

“We see AI as absolutely critical to our mission and what we call applied energy, specifically for nuclear energy,” Ritter said.

In 2022, an early version of GPT (generative pre-trained transformer), a large language model, averaged 29.6% accuracy on Ph.D.-level science questions. The latest model scored 78%.

These tools require a lot of computing power, which is driving up energy demand in the U.S., Ritter said.

“The level of computing we need to do AI training is basically unprecedented,” he said. “Some are talking about a gigawatt scale, so think about a full nuclear power plant just dedicated to a data center, 100% of the time.”

He said China was ahead of the U.S. in investing in nuclear energy infrastructure, in part to support AI.

The national lab is researching and developing ways to use the technology to shorten design and deployment timelines, accelerate experimentation and testing, automate operations and maintenance, and reduce risk while improving resilience.

Ritter urged lawmakers to encourage more instruction about these technologies in schools, both at universities and in earlier grades.

He thinks the state should be “pushing more AI use at that earlier level of education, so that when they come to Boise State, they already know these tools, and then hopefully they’re challenging our professor there, right?”

Herring, with VYNYL, also said AI should be integrated into education so students are becoming familiar with the tools.

He noted a number of areas where the technology could be or already is being used, such as transportation, health care, employment and public safety. However, amid all the opportunities for improvement, there are dangers to consider: the tool is limited by the data it has available, the models are sensitive to biases within the data set, and technology used to create realistic “deep-fakes” is widely available.

He cautioned against regulating use of the tool, instead suggesting more education about how these technologies might affect people.

“The algorithmic feed on TikTok is quite dangerous,” Herring said. “If you don’t know what you’re consuming, if you don’t have a conscious understanding of what you’re consuming, which most people do not, then it is easy to be intentionally or even unintentionally manipulated.”

Friday’s three-hour meeting concluded with a closing statement that Ehlers had prompted the popular AI tool ChatGPT to write, and with Anthon telling members he had asked AI to generate an image of him listening to the speakers during the meeting.

“It drew me with an earring,” he said.

Guido covers Idaho politics for the Lewiston Tribune, Moscow-Pullman Daily News and Idaho Press of Nampa. She may be contacted at lguido@idahopress.com and can be found on Twitter @EyeOnBoiseGuido.